Introduction

AWS Glue is a fully managed, serverless data integration service that simplifies the process of preparing and transforming data for analytics. It’s designed to handle ETL (Extract, Transform, Load) operations, making it easier to move data between various sources, catalog it, and execute complex transformation tasks.

Understanding AWS Glue pricing is crucial for managing costs effectively. This pricing guide helps you navigate the costs associated with using AWS Glue services for data processing, crawlers, and metadata management. With its pay-as-you-go model, AWS Glue allows users to pay only for the resources they consume. This model offers flexibility but requires careful cost management to avoid overspending.

By optimizing your usage and leveraging cost-saving strategies, you can make the most of AWS Glue’s capabilities while keeping expenses in check. Dive deeper into each component’s pricing in this guide to ensure efficient and cost-effective use of AWS Glue. For more insights on cloud expense management and other related topics, feel free to explore our blog.

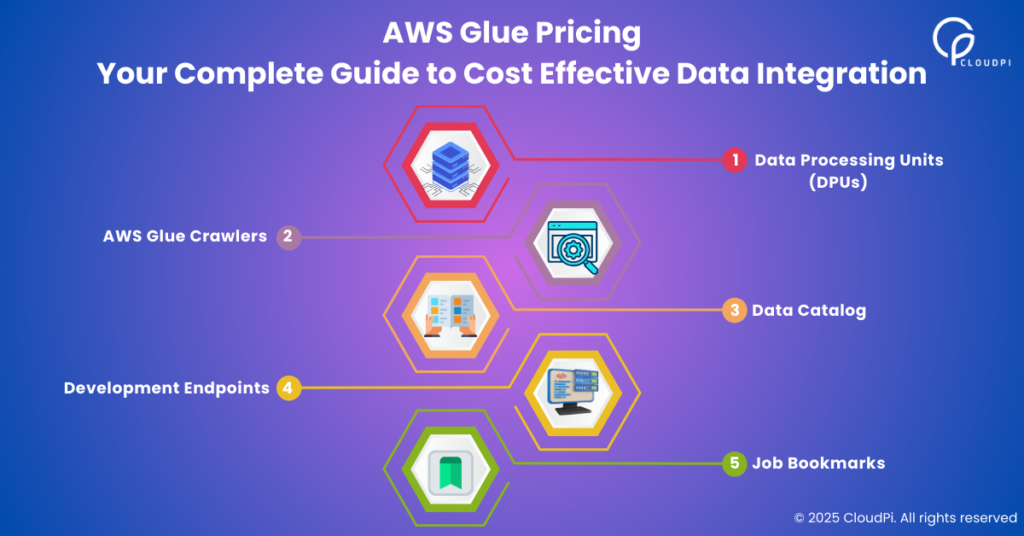

Understanding AWS Glue Pricing Components

1. Data Processing Units (DPUs)

AWS Glue pricing revolves significantly around Data Processing Units (DPUs), a core component that drives the service’s cost structure. DPUs are integral to the functionality of AWS Glue, providing the computational power necessary to execute ETL (Extract, Transform, Load) operations.

Definition and Function

In AWS Glue, a DPU consists of 4 vCPUs and 16 GB of memory. This combination ensures that your data processing tasks have sufficient resources to handle various workloads efficiently. DPUs facilitate not only traditional ETL jobs but also interactive sessions, offering flexibility in how you manage and transform data.

Pricing Details for ETL Jobs and Interactive Sessions

DPU usage in AWS Glue is priced by the DPU-hour. For running ETL jobs, the standard rate is approximately $0.44 per DPU-hour, although exact rates vary by AWS Region. This rate applies to different job types within AWS Glue, such as Spark Streaming Jobs or Python Shell Jobs. Interactive sessions for development are billed at the same $0.44 per DPU-hour, with per-second billing and a minimum duration of one minute.
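The arithmetic behind these figures can be sketched in a few lines of Python. This is a minimal estimate, not an official calculator: the function name `dpu_cost` is hypothetical, the rate is the approximate $0.44 quoted above, and applying the one-minute minimum to every run type is an assumption made for simplicity.

```python
DPU_HOUR_RATE_USD = 0.44  # approximate rate quoted above; check the price list for your Region


def dpu_cost(dpus: float, runtime_seconds: float, minimum_seconds: int = 60,
             rate_per_dpu_hour: float = DPU_HOUR_RATE_USD) -> float:
    """Estimate the DPU charge for one run, billed per second with a minimum duration."""
    if dpus <= 0 or runtime_seconds < 0:
        raise ValueError("dpus must be positive and runtime_seconds non-negative")
    # Runs shorter than the minimum are billed as if they lasted the minimum.
    billed_seconds = max(runtime_seconds, minimum_seconds)
    return dpus * (billed_seconds / 3600) * rate_per_dpu_hour


# A 10-DPU job that runs for 15 minutes: 10 DPUs * 0.25 hours * $0.44
print(f"15-minute job on 10 DPUs: ${dpu_cost(10, 15 * 60):.2f}")

# A 20-second session on 2 DPUs is billed for the 60-second minimum
print(f"20-second session on 2 DPUs: ${dpu_cost(2, 20):.4f}")
```

Doubling either the DPU count or the runtime doubles the estimate, which is why both levers matter when tuning a job.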

Comparison Across Job Types

Pricing differs across job types mainly in how many DPUs each one uses, not in the hourly rate. Spark Streaming Jobs incur costs similar to standard ETL jobs because they rely on the same DPUs, although a streaming job that runs continuously keeps accruing DPU-hours for as long as it is running. Python Shell Jobs can run on a fraction of a DPU (0.0625 DPU) or on a single DPU, so a small script typically costs far less per run than a Spark job. Recognizing these differences can aid in selecting the most cost-effective approach for your data integration tasks.

Efficient utilization of DPUs can significantly impact your AWS Glue expenditure. Monitoring DPU usage closely helps avoid unnecessary costs while ensuring optimal performance during data processing activities. By aligning your workload needs with appropriate DPU allocation, you can harness AWS Glue’s capabilities effectively without incurring excessive expenses.

Understanding how DPUs function within AWS Glue sets the foundation for exploring other components like crawlers and data catalogs, each contributing uniquely to your overall cost structure.

2. AWS Glue Crawlers

AWS Glue Crawlers are essential for data management as they automate the process of discovering and cataloging data from various sources. These crawlers scan your data, determine its structure, and populate the AWS Glue Data Catalog, making it easier to efficiently manage metadata assets. This process is crucial for maintaining an up-to-date view of your data landscape, which can greatly impact the effectiveness of ETL jobs and interactive sessions.

How AWS Glue Crawlers Work

- Scanning: Crawlers scan your data stored in different locations such as Amazon S3, databases, or data lakes.

- Inferring Schemas: They analyze the scanned data to understand its structure and format.

- Populating Data Catalog: The inferred schemas and metadata information are then stored in the AWS Glue Data Catalog.

This automated process saves time and effort compared to manual data discovery methods.

Cost Implications

Crawlers are charged based on their usage of Data Processing Units (DPUs), similar to ETL jobs. The charge is calculated at an hourly DPU rate for the time each crawl runs, with a minimum billing duration of 10 minutes per run. Because of that minimum, many very short crawls can cost more than fewer, consolidated ones, so scheduling crawlers only as often as your data actually changes helps you manage costs effectively.
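To see how the 10-minute minimum interacts with crawl frequency, here is a rough sketch. The function names are hypothetical, the $0.44 rate is assumed to match the ETL rate quoted earlier, and the DPU count is treated as a plain input even though in practice the service decides how much capacity a crawl uses.

```python
CRAWLER_MIN_SECONDS = 10 * 60  # 10-minute minimum per crawl, as described above
RATE_PER_DPU_HOUR = 0.44       # assumption: same approximate rate as ETL jobs


def crawler_run_cost(dpus: float, runtime_seconds: float) -> float:
    """Cost of a single crawl, applying the minimum billing duration."""
    billed_seconds = max(runtime_seconds, CRAWLER_MIN_SECONDS)
    return dpus * billed_seconds / 3600 * RATE_PER_DPU_HOUR


def monthly_crawler_cost(dpus: float, runtime_seconds: float,
                         runs_per_day: int, days: int = 30) -> float:
    """Cost of running the same crawl on a fixed schedule for a month."""
    return crawler_run_cost(dpus, runtime_seconds) * runs_per_day * days


# A crawl that finishes in 2 minutes is still billed for 10 minutes each time.
hourly_schedule = monthly_crawler_cost(dpus=2, runtime_seconds=120, runs_per_day=24)
daily_schedule = monthly_crawler_cost(dpus=2, runtime_seconds=120, runs_per_day=1)
print(f"hourly schedule: ${hourly_schedule:.2f}/month")
print(f"daily schedule:  ${daily_schedule:.2f}/month")
```

Under these assumptions the hourly schedule costs 24 times as much as the daily one, even though each crawl does very little work.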

To optimize your budget, it’s vital to understand how AWS Glue pricing is structured around components such as DPUs and crawlers. Factor DPU-hour charges for crawlers into your overall AWS Glue cost estimate to prevent unexpected expenses while getting the most out of this serverless data integration service.

One effective strategy to achieve this is by leveraging FinOps for maximum cloud efficiency with platforms like CloudPi. Such cutting-edge cloud management tools enable effective FinOps practices that significantly enhance cloud efficiency.

Additionally, understanding the complexity of cloud billing can further aid in cost optimization. CloudPi simplifies AWS, Azure, and GCP invoices, streamlining your cloud billing with automated cost tracking and a unified dashboard. This not only simplifies multi-cloud management but also facilitates efficient cost allocation and usage optimization for better savings.

Incorporating these strategies into your data management approach can yield significant benefits, including enhanced operational efficiency and substantial cost savings. For more insights on cloud automation, cloud analytics, or expert consulting services in these areas, consider exploring our comprehensive resources available under our consulting category.

3. Data Catalog

The Data Catalog in AWS Glue is a centralized place where you can store and manage metadata for your data assets. It is essential for keeping track of information about your data, such as its location and structure, across various sources like Amazon S3 and Amazon Redshift.

Importance of Data Catalog

The Data Catalog plays a crucial role in the following ways:

- Organizing Metadata: It helps you organize and maintain metadata efficiently, making it easier to find and access information about your data assets.

- Integration with ETL Jobs: The Data Catalog seamlessly integrates with ETL (Extract, Transform, Load) jobs, allowing you to define data transformations and workflows based on the metadata stored in the catalog.

- Streamlining Data Processing Workflows: By providing a centralized repository for metadata, the Data Catalog facilitates smoother data processing workflows, enabling faster and more efficient data operations.

Pricing Model

AWS Glue’s pricing model for the Data Catalog includes free storage for the first one million metadata objects. This provision helps users start managing their metadata without incurring immediate costs. However, once this limit is exceeded, additional charges apply. The cost is $1.00 per 100,000 objects per month beyond the free tier.
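As a quick worked example of the storage pricing above, the following sketch computes a monthly charge from an object count. The function name is hypothetical, and the linear proration of partial blocks of 100,000 objects is an assumption; the actual bill may round differently.

```python
FREE_OBJECTS = 1_000_000      # first one million objects are free
USD_PER_100K_OBJECTS = 1.00   # rate beyond the free tier, per month


def catalog_storage_cost(object_count: int) -> float:
    """Estimate the monthly Data Catalog storage charge in USD."""
    billable_objects = max(0, object_count - FREE_OBJECTS)
    # Assumption: partial blocks of 100,000 objects are prorated linearly.
    return billable_objects / 100_000 * USD_PER_100K_OBJECTS


for count in (800_000, 1_000_000, 1_500_000, 5_000_000):
    print(f"{count:>9,} objects -> ${catalog_storage_cost(count):.2f}/month")
```

The estimate stays at zero until the catalog passes one million objects, then grows by $1.00 for every additional 100,000.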

Cost Management Tips

Understanding these storage limits and associated costs is vital when planning your data integration strategy using AWS Glue. Here are some tips to manage expenses effectively:

- Monitor the number of metadata objects stored in the Data Catalog regularly.

- Identify any unused or unnecessary metadata objects that can be deleted to reduce costs.

- Optimize your ETL processes by reusing existing metadata whenever possible instead of creating new objects.

By implementing these cost management strategies, you can ensure that resources are allocated efficiently and expenses are kept under control while benefiting from AWS Glue’s comprehensive data cataloging capabilities.
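One way to act on the monitoring tip is to count tables per database with the Glue API. The sketch below assumes a boto3 Glue client is passed in; `count_catalog_tables` is a hypothetical helper name. It counts tables only, so other object types that may count toward the limit, such as partitions, are not included.

```python
def count_catalog_tables(glue_client) -> dict:
    """Return {database_name: table_count} by paging through the Data Catalog."""
    counts = {}
    database_pages = glue_client.get_paginator("get_databases").paginate()
    for database_page in database_pages:
        for database in database_page["DatabaseList"]:
            name = database["Name"]
            table_pages = glue_client.get_paginator("get_tables").paginate(
                DatabaseName=name
            )
            counts[name] = sum(len(page["TableList"]) for page in table_pages)
    return counts


# Example usage (requires boto3 and AWS credentials):
#   import boto3
#   counts = count_catalog_tables(boto3.client("glue"))
#   print(sum(counts.values()), "tables across", len(counts), "databases")
```

Running this on a schedule and recording the totals gives a simple trend line, which makes it easier to spot databases that keep growing or are no longer used.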

4. Development Endpoints

Development endpoints in AWS Glue are essential for interactive development and testing of ETL code. They provide a convenient environment for debugging and refining your data transformation logic before it goes live. This flexibility ensures that your ETL jobs are reliable and optimized.

Cost Structure

The cost of using development endpoints is based on Data Processing Units (DPUs) used during development activities. Each endpoint utilizes DPUs to allocate computational resources such as vCPUs and memory, similar to regular ETL jobs. Therefore, you will be charged for the DPUs consumed while using development endpoints, making it crucial to manage your usage wisely.

Pricing Model Insights

Understanding the pricing model for DPU usage can help you plan your development activities effectively. Here are some key points to consider:

- ETL Jobs and Interactive Sessions also depend on DPU pricing, highlighting the importance of optimizing resource allocation across different AWS Glue components. This aligns with broader cloud resource allocation strategies that can lead to significant cost-saving opportunities.

- Development endpoints are billed by the second, subject to a minimum duration charge, which provides flexibility for short-term tests or iterative development cycles.

Optimizing Costs

As you optimize your AWS Glue strategy, keep in mind how development endpoints impact overall costs and ensure they align with your workflow requirements.

5. Job Bookmarks

AWS Glue job bookmarks are essential for managing ETL jobs as they track processed data and enable incremental data processing. These bookmarks allow you to continue ETL tasks without reprocessing data unnecessarily, saving time and resources. By keeping track of previously processed datasets, job bookmarks facilitate efficient handling of ongoing data ingestion.

Cost considerations for using job bookmarks

The cost considerations for using job bookmarks revolve around their ability to optimize resource utilization. AWS Glue pricing is based on different components such as Data Processing Units (DPUs), so the efficient use of DPUs directly affects overall costs. Job bookmarks help reduce DPU usage by eliminating unnecessary processing, making them an important part of a cost-effective ETL strategy.

When planning your AWS Glue operations, it’s important to understand how job bookmarks affect DPU usage. Bookmarks do not change the DPU-hour rate; they reduce the amount of data each run has to process, which shortens runtime and lowers the DPU-hours billed. This can lead to significant savings, especially in large-scale data environments where only a small share of the data is new on each run.
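Job bookmarks are switched on through the `--job-bookmark-option` job argument. The snippet below is a small sketch that builds that argument as a Python dictionary; the helper name `bookmark_arguments` is hypothetical, and how you pass the dictionary on (for example as default arguments when defining the job) depends on your deployment tooling.

```python
def bookmark_arguments(enabled: bool = True) -> dict:
    """Build the Glue job argument that turns job bookmarks on or off."""
    option = "job-bookmark-enable" if enabled else "job-bookmark-disable"
    return {"--job-bookmark-option": option}


# Arguments for an incremental job that should skip already-processed data
print(bookmark_arguments())

# Arguments for a one-off full reprocessing run
print(bookmark_arguments(enabled=False))
```

Enabling the argument is only half of the setup: inside the job script, sources generally need a `transformation_ctx` value and the script needs to call `job.commit()` so that the bookmark state is saved at the end of each run.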

Considering cloud billing in AWS Glue operations

In addition to DPU pricing, it’s crucial to consider the broader context of cloud billing when optimizing your AWS Glue operations. For example, comparing different API integration solutions like Amazon API Gateway and MuleSoft can provide further insights into cost-saving strategies. By exploring various aspects of cloud billing, you can uncover additional opportunities for optimizing your overall cloud expenditure while ensuring the efficiency of your ETL processes.

Additional Costs to Consider When Using AWS Glue

Using AWS Glue involves more than just the core service expenses. It’s essential to account for additional costs from related AWS services:

1. Amazon S3 Storage Costs

Data processed or stored using AWS Glue often resides in Amazon S3, a popular cloud storage service known for its scalability and reliability. Both the volume of data stored and the number of requests made against it affect your overall expenditure. For instance, minimizing GET requests can help reduce Amazon S3 costs. Monitoring and optimizing your S3 usage is crucial to avoid unnecessary charges.

2. CloudWatch Logs

ETL jobs and other operations in AWS Glue produce logs that are stored in Amazon CloudWatch. While these logs provide valuable insights for monitoring and debugging, they come with associated fees based on data ingestion and storage. Regularly reviewing log levels and retention settings can help keep these costs manageable.
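Retention settings can be applied programmatically. The following sketch assumes a boto3 CloudWatch Logs client is passed in and uses `put_retention_policy` to cap how long Glue job logs are kept. The helper name is hypothetical, and the log group names are assumed defaults that may differ in your account; the call fails for a log group that does not exist yet.

```python
# Assumed default log groups for Glue jobs; verify the names in your account.
GLUE_LOG_GROUPS = ("/aws-glue/jobs/output", "/aws-glue/jobs/error")


def set_glue_log_retention(logs_client, days: int = 30,
                           log_groups=GLUE_LOG_GROUPS) -> list:
    """Apply the same retention period (in days) to each Glue log group."""
    for group in log_groups:
        logs_client.put_retention_policy(logGroupName=group, retentionInDays=days)
    return list(log_groups)


# Example usage (requires boto3 and AWS credentials):
#   import boto3
#   set_glue_log_retention(boto3.client("logs"), days=14)
```

A shorter retention period limits storage charges, while ingestion charges depend on how much the jobs log in the first place, so log levels are worth reviewing as well.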

Understanding the relationship between AWS Glue and these ancillary services is vital for accurate cost estimation. By considering these factors, you can better plan your budget and optimize resource allocation across your data integration processes. Additionally, exploring various strategies for cloud cost optimization could further enhance your budgeting efforts when utilizing services like AWS.

Cost Optimization Strategies for AWS Glue Users

1. Leveraging Free Tiers Effectively

Understanding how to leverage free tiers can significantly reduce your costs when using AWS Glue services. AWS offers various free tier benefits that allow you to experiment and manage data without incurring significant expenses, especially when you’re just starting.

Key Free Tier Benefits for AWS Glue

- Metadata Storage: AWS Glue provides a generous free tier for metadata storage. The first million metadata objects stored in the AWS Glue Data Catalog are free of charge each month. This benefit is particularly useful if you’re managing a moderate amount of data and can help keep your costs low as you scale up your operations.

- Requests: The first million requests to the Glue Data Catalog each month are free. By optimizing how and when you make these requests, you can avoid unnecessary charges that might accrue through frequent API interaction.

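- Development and Testing: Develop and test ETL jobs on small datasets with minimal DPU capacity before committing to full-scale deployments. Note that the free tier applies to Data Catalog storage and requests rather than to job DPU-hours, so keeping test runs short and small is what keeps development costs low. This approach allows you to fine-tune your pipelines and ensure efficiency before moving into higher usage levels.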

Example: Using Free Tiers in Practice

Imagine you’re setting up a new data pipeline with AWS Glue to process sales data from an e-commerce platform. Start by cataloging your data in the Glue Data Catalog, which is cost-free for the first one million entries. During development, utilize the free tier for conducting initial tests on small datasets, ensuring that your ETL processes are optimized before scaling up.

This strategic use of the free tier not only minimizes costs but also ensures that you’re making informed decisions about resource allocation as your project grows.

Monitoring your usage against these free tiers becomes essential. Implement DPU monitoring best practices to track your resource consumption effectively. Regular reviews can highlight areas where optimizations can be made, allowing you to adjust workloads or select more efficient processing methods like using Parquet or ORC file formats.

Incorporating these cost management techniques within your workflow not only reduces expenses but also promotes sustainable growth. As you continue using AWS Glue services, keeping an eye on cost-saving opportunities will ensure efficient resource utilization aligned with your business objectives.

For those considering a broader strategy involving multiple cloud services, exploring options like cloud migration could provide additional flexibility and cost efficiency. Additionally, understanding multi-cloud costs can further enhance your overall cost optimization strategies.

2. Using Efficient Data Formats Like Parquet or ORC To Lower Read/Write Costs

Optimizing data formats is a crucial cost management technique for AWS Glue users. Utilizing efficient data formats such as Parquet and ORC can significantly lower read/write costs during data processing.

Advantages of Parquet Format:

- Columnar Storage: Parquet is designed for columnar storage, allowing for efficient querying and data compression. This reduces the amount of data scanned, resulting in lower processing costs.

- Compression Efficiency: Offers high compression ratios, minimizing the storage footprint and subsequently lowering associated expenses on services like Amazon S3.

Benefits of ORC Format:

- Optimized Row Columnar (ORC): Stores data compactly by compressing both the values and the structure, enhancing read performance while minimizing storage requirements.

- Predicate Pushdown: Allows for filtering data at the storage level, decreasing the amount of data that needs to be processed and thus cutting down on resource utilization.

Adopting these formats aligns with best practices for minimizing expenses while using AWS Glue services effectively. Because jobs read and write less data, they finish sooner and consume fewer DPU-hours, and the smaller files also reduce storage costs on Amazon S3.
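The benefit of columnar storage can be illustrated with a toy example in plain Python. This is not how Parquet or ORC are implemented; it only shows why reading one column from a column-oriented layout touches far fewer values than reading it from row-oriented records. The dataset and function names are made up for illustration.

```python
# Toy dataset: 1,000 records with 5 fields each (hypothetical sales data).
rows = [
    {"order_id": i, "customer": f"c{i % 50}", "country": "US",
     "amount": i * 1.5, "notes": "n/a"}
    for i in range(1000)
]


def sum_row_layout(records, column):
    """Row-oriented layout (like CSV): every field of every record is read."""
    total, touched = 0.0, 0
    for record in records:
        touched += len(record)  # the whole record is read to reach one field
        total += record[column]
    return total, touched


# Column-oriented layout: each column is stored together.
columns = {name: [record[name] for record in rows] for name in rows[0]}


def sum_column_layout(table, column):
    """Column-oriented layout: only the requested column is read."""
    values = table[column]
    return float(sum(values)), len(values)


row_total, row_touched = sum_row_layout(rows, "amount")
col_total, col_touched = sum_column_layout(columns, "amount")
print(f"row layout touched {row_touched} values, column layout touched {col_touched}")
```

Both approaches produce the same total, but the column layout reads one fifth of the values here. Real columnar formats add compression and predicate pushdown on top of this effect.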

Common Cost Pitfalls to Avoid When Using AWS Glue Services

Avoiding unnecessary expenses in AWS Glue requires careful consideration of potential pitfalls:

1. Over-provisioning Resources

Allocating more Data Processing Units (DPUs) than needed can significantly inflate costs. Ensure you accurately estimate the necessary resources for your ETL jobs by analyzing historical job performance and scaling appropriately.
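A simple starting point for analyzing historical job performance is to turn past runs into DPU-hours and cost. The sketch below assumes run records shaped like the `JobRuns` entries returned by the Glue `get_job_runs` API, using their `ExecutionTime` (seconds) and `MaxCapacity` (DPUs) fields. The sample data is invented, the $0.44 rate is the approximate figure quoted earlier, and minimum billing durations are ignored.

```python
RATE_PER_DPU_HOUR = 0.44  # approximate rate; varies by Region


def summarize_job_runs(job_runs, rate: float = RATE_PER_DPU_HOUR) -> list:
    """Convert job run records into DPU-hours and an estimated cost per run."""
    summaries = []
    for run in job_runs:
        dpu_hours = run["MaxCapacity"] * run["ExecutionTime"] / 3600
        summaries.append({
            "id": run.get("Id"),
            "dpu_hours": dpu_hours,
            "cost_usd": dpu_hours * rate,
        })
    return summaries


# Hypothetical history: the same job run with 20 DPUs and then with 10 DPUs.
sample_runs = [
    {"Id": "jr_20dpu", "ExecutionTime": 1500, "MaxCapacity": 20.0},
    {"Id": "jr_10dpu", "ExecutionTime": 1800, "MaxCapacity": 10.0},
]
for summary in summarize_job_runs(sample_runs):
    print(f"{summary['id']}: {summary['dpu_hours']:.2f} DPU-hours, "
          f"${summary['cost_usd']:.2f}")
```

In this invented history, halving the capacity lengthens the run only slightly, so the smaller allocation is cheaper. When runtime does not shrink in proportion to added DPUs, the job is likely over-provisioned.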

2. Inefficient Formats

Using inefficient data formats can lead to higher read/write costs. Formats like JSON or CSV, while common, may not be optimal for large-scale data processing. Opt for efficient formats such as Parquet or ORC to reduce data size and improve processing speed, which ultimately lowers costs.

Strategies to mitigate these issues include regularly reviewing your resource allocation against job requirements and conducting cost analyses to identify optimization opportunities. Implementing these practices ensures effective cost management while maintaining the performance of your data integration tasks.

Conclusion

Understanding AWS Glue pricing is crucial for effective data integration management. This AWS Glue Pricing Guide has provided insights into the various components influencing costs, from Data Processing Units to Data Catalog and Crawlers.

By applying cost optimization strategies, you can leverage AWS Glue services efficiently:

- Utilize free tiers for metadata storage and requests.

- Opt for efficient data formats like Parquet or ORC.

- Avoid common pitfalls such as over-provisioning resources, which can be addressed through cloud resource right-sizing.

This comprehensive approach not only ensures cost-effectiveness but also enhances your ability to manage data integration tasks seamlessly within AWS Glue. Your journey towards optimized data processing starts with informed decisions. Embrace these strategies to balance performance and budget effectively.
